Move fast and save things: the case for climate analytics innovation

The scientific journal Nature Climate Change recently published “Business risk and the emergence of climate analytics,” a paper criticizing the use of climate data to inform financial decision-making and disclosures. At Sust Global, we have some thoughts about this.

Overall, the warnings are appropriate - beware the snake oil salesmen! But there is still a large gap, and one we can close, between what businesses know about climate risk (almost nothing) and what we can tell them based on the latest scientific research. Innovative technology can democratize access to this type of crucial information, and we cannot afford to wait.

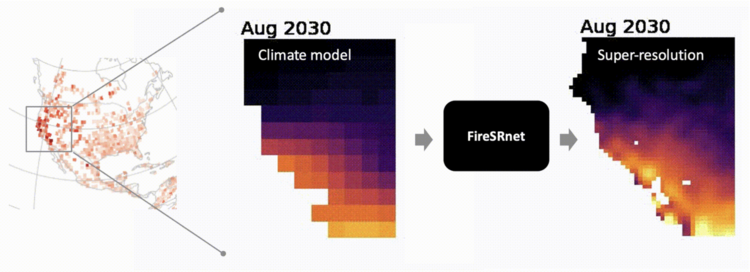

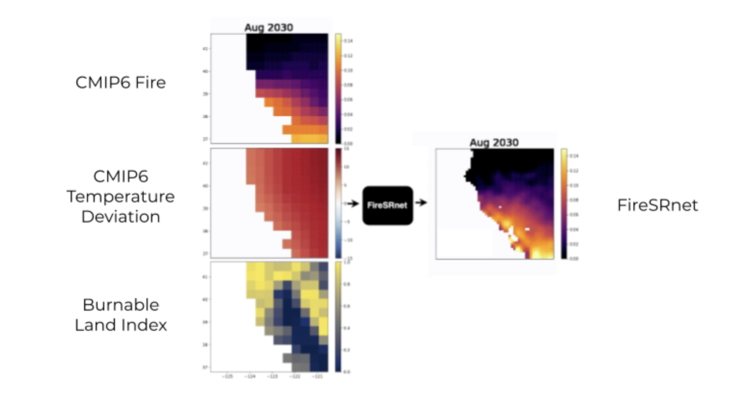

Our proprietary FireSRnet model transforms low-resolution climate exposure maps into a super-resolution map that improves resolution 8x-16x and enables asset-level analytics.

Signal vs. Noise

Climate data is complex, but this type of noisy data is managed day-to-day by financial and enterprise businesses, and they deserve a bit more credit. Their analysts specialize in pulling useful information from noisy signals and incorporating it into internal risk assessments, and they will not have a difficult time grasping that some of these hazard projections have more confidence, or a stronger signal, than others. For example, temperature and heatwaves are a much clearer predictive signal than wind speeds or co-occurrences of extreme events.

Limitations of Global Climate Modelling

“While inclusion in CMIP requires the submission of specific simulations, it does not take into account the ‘skill’ of a model to represent climate phenomenon, when compared to observational data.”

An example of a Global Climate Model simulating projected fire risk.

Forward-looking Global Climate Models, or GCMs, aren’t perfect, and we won’t resolve some of the modeling limitations anytime soon. So the challenge right now is to properly convey or “translate” this information so that it is used appropriately within financial institutions. This is where the data validation processes that we undertake at Sust Global are critical. By fusing historic satellite data and observed phenomena with near-real-time weather data and forward-looking climate models, we are able to deliver a 360-degree view of climate-related risk. It still isn’t going to be perfect, but we can describe how the models work and enable a more informed understanding of the science behind GCMs.

“Whether they do provide useful predictive skill remains an active area of scientific debate. Importantly, these two types of CMIP experiments—greenhouse gas forced simulations and observationally initialized simulations—are available via the ESGF but provide profoundly different insights into climate risk and on fundamentally different timescales.”

We agree that using CMIP to project precise changes over the next decade will be difficult due to natural variability from events like El Niño, which can create short-term trends. There are always risks to overselling confidence in certain hazard projections.

Model Ensembling - getting the right data

“Attempts to overcome these uncertainties through the use of ensemble averages often mask large systematic biases in individual models”

The technical team at Sust Global has been working on this recently. Looking at the range of different models of acute physical hazards like fires, floods and droughts, we found that simply averaging them without bias correction would lead to inconsistent signals. For this reason, bias correction and smart aggregation techniques for model ensembling are critical capabilities for making the simulations from frontier climate models useful and actionable.
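To make the point about averaging concrete, here is a minimal sketch of why bias correction matters before ensembling. It is not Sust Global's pipeline: it assumes the simplest possible correction (an additive mean shift against an observed historical baseline), and the model names, function names and numbers are all made up for illustration.

```python
import numpy as np

def bias_correct(model_hist, model_future, observed_hist):
    """Additive mean-shift correction (an assumed, deliberately simple method).

    Subtracts the model's mean bias over the historical period from its
    future projection.
    """
    bias = model_hist.mean() - observed_hist.mean()
    return model_future - bias

def ensemble_mean(projections):
    """Average a list of equally shaped projections element-wise."""
    return np.mean(np.stack(projections), axis=0)

# Synthetic observed baseline (hypothetical hazard index, mean 11.0).
observed_hist = np.array([10.0, 11.0, 12.0])

# Hypothetical models: (historical simulation, future projection).
models = {
    "model_a": (np.array([13.0, 14.0, 15.0]), np.array([15.0, 16.0])),  # runs 3.0 too high
    "model_b": (np.array([8.0, 9.0, 10.0]), np.array([10.5, 11.5])),    # runs 2.0 too low
}

raw_members = [future for _, future in models.values()]
corrected_members = [
    bias_correct(hist, future, observed_hist) for hist, future in models.values()
]

raw_mean = ensemble_mean(raw_members)
corrected_mean = ensemble_mean(corrected_members)

# Disagreement between the two members before and after correction.
raw_spread = np.abs(raw_members[0] - raw_members[1])
corrected_spread = np.abs(corrected_members[0] - corrected_members[1])

print("raw ensemble mean:      ", raw_mean)
print("corrected ensemble mean:", corrected_mean)
print("member spread raw / corrected:", raw_spread, corrected_spread)
```

In this toy case the raw average looks reasonable while the two members disagree by 4.5 units. After correction they disagree by 0.5, so the ensemble mean reflects a shared signal rather than offsetting biases. Real workflows typically use richer corrections (for example quantile-based methods) and weight models by skill.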

"Further, where analysis requires robust information around extremes at spatial scales that reflect a city-scale or agricultural region, information from GCMs should be integrated with expert judgement sourced from within the weather and climate sciences."

Our technical team is doing just this to improve our fire risk exposure offering, integrating high-resolution geospatial data sources, machine learning expertise and ground truth data into our pipeline to generate super-resolution risk exposure maps.

Super Resolution and downscaling

“GCMs are not valid tools for examining how climate will change at these [asset-level] scales and dynamical and statistical downscaling [e.g. super-resolution] does not change this assessment.”

We disagree with this statement. Global climate models provide good detail on long-term trends and on how risk varies between sites. Keep in mind that the authors’ definition of downscaling is restricted here to a certain class of methods that do not incorporate other expert-derived, high-resolution datasets like elevation data for flooding or land cover for fire. Super-resolution can be considered a statistical downscaling approach, but unlike standard downscaling methods it can incorporate high-resolution data on other related variables to help enhance resolution. Coupling super-resolution with other data sources and known historical occurrences goes well beyond downscaling alone.
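As a rough illustration of what "incorporating high-resolution data on a related variable" can mean, the sketch below redistributes a coarse exposure grid using a fine-grained covariate such as burnable land fraction. This is not FireSRnet, which is a learned model; it is an assumed, much simpler covariate-weighted scheme, and the function name, grid values and covariate are hypothetical.

```python
import numpy as np

def downscale_with_covariate(coarse, fine_weight, factor):
    """Spread each coarse cell's value over factor x factor fine cells.

    Fine cells are weighted by a high-resolution covariate so that the mean
    of each block still equals the original coarse value. Blocks whose
    covariate is zero everywhere are set to zero (an assumption made here;
    in that case the coarse mean is not preserved).
    """
    h, w = coarse.shape
    # Nearest-neighbour upsampling: repeat every coarse cell factor x factor times.
    upsampled = np.kron(coarse, np.ones((factor, factor)))
    # Mean covariate per coarse block, upsampled back to the fine grid.
    block_mean = fine_weight.reshape(h, factor, w, factor).mean(axis=(1, 3))
    block_mean_up = np.kron(block_mean, np.ones((factor, factor)))
    # Relative weight of each fine cell within its block (0 where undefined).
    relative = np.divide(
        fine_weight,
        block_mean_up,
        out=np.zeros_like(fine_weight, dtype=float),
        where=block_mean_up > 0,
    )
    return upsampled * relative

# Hypothetical 1 x 2 coarse exposure grid and 2 x 4 burnable-land covariate.
coarse = np.array([[0.4, 0.8]])
burnable = np.array([
    [1.0, 0.0, 1.0, 1.0],
    [1.0, 0.0, 1.0, 1.0],
])

fine = downscale_with_covariate(coarse, burnable, factor=2)
print(fine)

# Averaging the fine grid back to coarse blocks recovers the input.
recovered = fine.reshape(1, 2, 2, 2).mean(axis=(1, 3))
print(recovered)
```

In the left-hand block the exposure moves entirely onto the burnable column, while the block average stays at 0.4. The output is a redistributed index rather than a calibrated probability. The coarse model still sets the overall level, and the auxiliary data decides where within the cell that exposure plausibly sits.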

We integrate geospatial data, machine learning and ground truth data, such as a burnable land index, to create our FireSRnet super-resolution exposure maps.

Translating complex climate science into validated financial signals

“Third, the emergence of climate services requires the training of a new group of professionals, loosely termed here as ‘climate translators’. Their critical role lies in translating the complex climate information compiled from observations, meteorological reanalyses, and near- and long-term model predictions for non-expert users.”

At Sust Global, our product gives organisations the power to transform that complicated climate data into meaningful, actionable financial insights. If you want to know more, or jump into the debate, don’t hesitate to reach out.