Climate risk: why historical data is not enough

What is historical data and why it matters

Historical climate data refers to any data on past climate conditions and events - from yesterday to millions of years ago. This data creates a climate “story” for the location or asset being analyzed. Paleoclimatologists study major climate shifts in the distant past, such as ice ages, to theorize about the possible repercussions of climate change today, while more recent records are used to understand a location’s current risk.

For example, Hunan province in China has a long history of flooding and, since rainfall continues to intensify, we expect flooding to worsen. Another example is California, which has a long history of wildfires. As drought years have become more regular, so has the “fire season”, even in previously less fire-prone areas of Northern California.

These types of historical data have traditionally been used in areas directly affected by climate change, for example insurance and land-use planning. But today, many kinds of business - from IT to agriculture, from mining to finance - are realizing that climate needs to be part of their overarching strategy.

This change is influenced by a sharp increase in climate-related disasters (22 for the US in 2020 vs 5 in 2000), new regulation to comply with the Paris Agreement, and even COVID-19.

Yet when it comes to the changing climate, analysis of historical data gives us only a part of the picture.

Bingley Boxing Day Floods 2015 - White Horse Pub Bingley, UK. Photo by Chris Gallagher on Unsplash

History teaches us something (but not everything)

Insurers use historical weather data to understand when and where weather conditions are most likely to cause damage. This helps them plan response operations and predict financial impact. Historical data is also used to estimate the possible damage to similar types of areas and so encourage better risk management.

But what if the climate events are occurring more often and in a different pattern than before? What if the damages we’re seeing are much worse than anything previously on record?

The 10 warmest years on record have all occurred since 2005, with 7 of the 10 happening after 2014.

2020 was considered the worst year for flooding throughout Asia, with over $32 billion of damage in China alone, and climate-related disasters cost the US almost $100 billion.

Also, in the last month NASA has confirmed what scientists have suspected for years - that the changes in climate we are now experiencing are human-caused.

“Paleoclimate evidence reveals that current warming is occurring roughly ten times faster than the average rate of ice-age-recovery warming. Carbon dioxide from human activity is increasing more than 250 times faster than it did from natural sources after the last Ice Age.” (NASA)

The sharp increase in climate events and increasingly unpredictable weather patterns demonstrate that historical data alone will severely underestimate the likelihood of future extreme climate events. A report published by Stanford in 2020 describes the difficulty:

“Scientists trying to isolate the influence of human-caused climate change on the probability and/or severity of individual weather events have faced two major obstacles. There are relatively few such events in the historical record, making verification difficult, and global warming is changing the atmosphere and ocean in ways that may have already affected the odds of extreme weather conditions.”

In other words, historical data can tell us that flooding in China has been an issue for hundreds of years. What it won’t tell us is how much worse it will get if we continue business as usual, or how much losses could decrease if steps are taken to mitigate this risk.
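To make the underestimation concrete, here is a minimal sketch of how a modest shift in average conditions changes the odds of an extreme event. Everything in it is an assumption chosen for illustration: the rainfall figures are hypothetical, and annual maximum rainfall is modeled as a normal distribution purely for simplicity (extreme-value distributions are the more usual choice in practice).

```python
from statistics import NormalDist


def exceedance_probability(threshold, mean, std_dev):
    """Probability that a single year's maximum exceeds `threshold`."""
    return 1.0 - NormalDist(mu=mean, sigma=std_dev).cdf(threshold)


# Hypothetical values, not taken from any real dataset.
HIST_MEAN_MM = 100.0     # average annual maximum rainfall in the historical record
HIST_STD_MM = 20.0       # year-to-year spread, assumed unchanged
SHIFTED_MEAN_MM = 110.0  # assumed average after the climate has shifted

# Rainfall level that the historical record treats as a 1-in-100-year event.
threshold_mm = NormalDist(HIST_MEAN_MM, HIST_STD_MM).inv_cdf(0.99)

p_historical = exceedance_probability(threshold_mm, HIST_MEAN_MM, HIST_STD_MM)
p_shifted = exceedance_probability(threshold_mm, SHIFTED_MEAN_MM, HIST_STD_MM)

print(f"threshold: {threshold_mm:.1f} mm")
print(f"return period from historical data: {1 / p_historical:.0f} years")
print(f"return period after the shift: {1 / p_shifted:.0f} years")
```

Under these assumptions, a 10% rise in the average turns the "1-in-100-year" event into one expected roughly every 30 years. An assessment built only on the historical record would keep reporting the 100-year figure.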

Forward-looking data - into the unknown

Of course, we can’t know what the future will bring. But we now have the tools to create the right kind of climate intelligence so we can properly assess future risks to our assets. Thanks to organizations like NASA and the European Space Agency, as well as quite a few companies, satellites have not only photographed the entire world but have also measured important climate indicators such as the amount of burnable land in a locale, changing land cover, the rate of polar ice melt, and sea level rise. By analyzing these and other large datasets, geospatial machine learning and AI can learn and project impending physical risks.

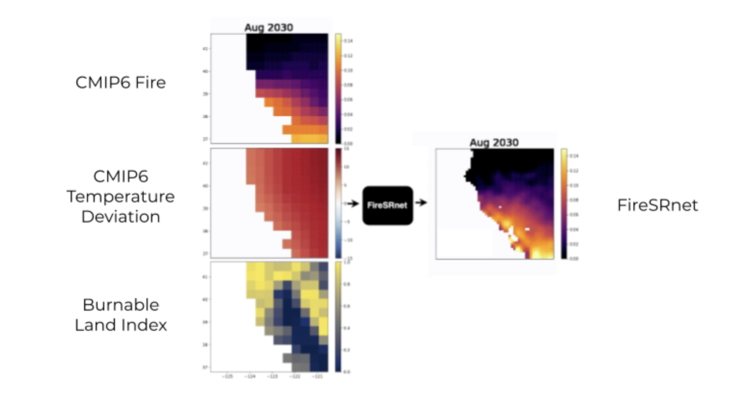

An example of this can be found in our blog about our FireSRnet (super resolution) capability. To evaluate the potential increase of wildfires in Northern California, we used three datasets: satellite imagery, temperature, and land cover (potential burnable land) information. We then analyzed these with our machine learning algorithms to view the fire risk close-up, at the level of individual assets or properties in the area. We can then apply this learning to assess future risk exposure from wildfires at higher spatial resolution.

Three different datasets, run through our machine learning algorithms, produce more accurate information about the future of a location or asset.

At Sust Global, we compare the projected footprint of such hazards over the coming decade with their footprint in the recent past, say the last 20 years. For example, we are seeing increasing fires in Siberia and Alaska, where wildfire wouldn’t seem likely if we looked only at historical data from 1980-2010.
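The comparison of past and projected footprints can be pictured with a small sketch. This is an illustration only, not Sust Global's implementation: the function `compare_footprints`, the representation of a footprint as a set of grid cells, and the cell coordinates are all hypothetical.

```python
def compare_footprints(past_cells, projected_cells):
    """Compare two hazard footprints given as collections of (row, col) grid cells."""
    past = set(past_cells)
    projected = set(projected_cells)
    return {
        # Cells with no hazard in the past record but with projected hazard.
        "newly_exposed": projected - past,
        # Cells affected in both periods.
        "persisting": projected & past,
        # Cells affected in the past but not in the projection.
        "no_longer_exposed": past - projected,
        # Fractional change in footprint size (None if the past footprint is empty).
        "relative_change": (len(projected) - len(past)) / len(past) if past else None,
    }


# Hypothetical footprints: cells burned in the recent past vs. projected to burn.
past_fire_cells = {(0, 0), (0, 1), (1, 1)}
projected_fire_cells = {(0, 1), (1, 1), (2, 1), (2, 2), (3, 2)}

summary = compare_footprints(past_fire_cells, projected_fire_cells)
print("newly exposed cells:", sorted(summary["newly_exposed"]))
print(f"change in footprint size: {summary['relative_change']:+.0%}")
```

The "newly exposed" cells are the ones that matter most for this argument: they stand in for places like Siberia and Alaska, where the past record alone would suggest little or no risk.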

When assessing such risks, one needs to understand that climate is a global phenomenon, with global impacts. Shifts in where hazards occur could have a significant impact on human settlement and industrial development in the coming decades as the planet gets warmer and such hazards manifest more often.

The way to properly assess current and future risk is to use the multiple data streams we now have available, then analyze and output this information in an actionable format so that businesses can make the right decisions for their future.

To learn more about our product and how it can help your business, reach out to us by filling in the form below. We’d love to talk to you.